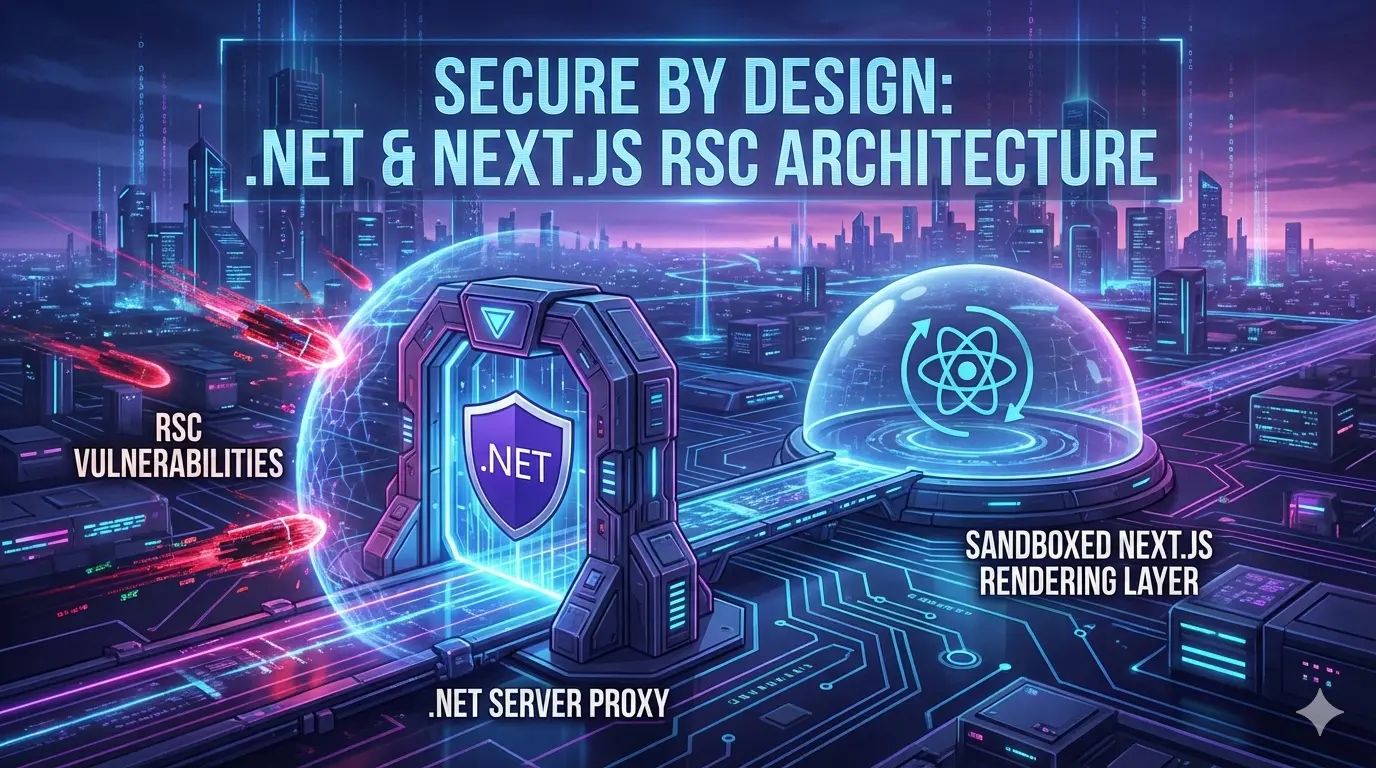

How the Next.js RSC template architecture protects against React Server Components vulnerabilities through process isolation and defense-in-depth security

Demis Bellot
