Text to Blazor CRUD upgraded to use best LLMs

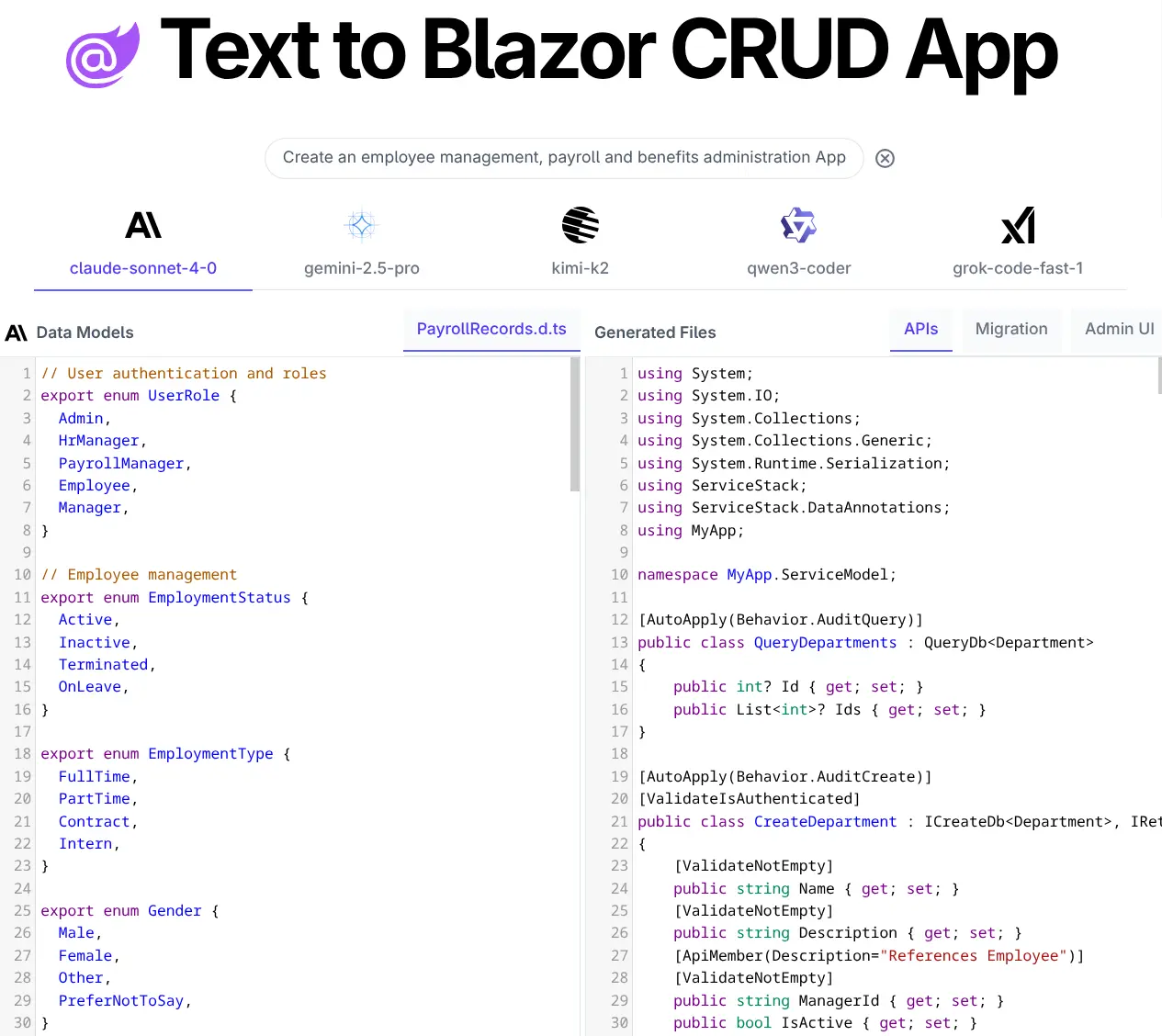

Text to Blazor has been upgraded to use the best coding LLMs from Anthropic, Google, Moonshot AI, Alibaba and xAI to power its ability to generate new Blazor Admin CRUD Apps from just a text description.

Just enter the type of App you want to create, or the Data Models it needs, and it will generate a new Blazor CRUD App for you.

This queries 5 different high-quality AI models to generate 5 different sets of Data Models, APIs, DB Migrations and Admin UIs, which you can browse to find the one that best matches your requirements.
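Comparing several models side by side comes down to fanning one prompt out to multiple LLMs and collecting the responses. The TypeScript sketch below assumes the requests are sent concurrently and that a failing model is simply skipped; `generate`, `Candidate` and `generateCandidates` are hypothetical names, not a Text to Blazor or okai API.

```typescript
// Hypothetical signature for a call asking one LLM to generate Data Models for a prompt.
type Generate = (model: string, prompt: string) => Promise<string>;

interface Candidate {
  model: string;
  dataModels: string; // e.g. the contents of a TypeScript Declaration file
}

// Sends the same prompt to every model concurrently and keeps the successful responses,
// preserving the order of the `models` array.
async function generateCandidates(
  models: string[],
  prompt: string,
  generate: Generate,
): Promise<Candidate[]> {
  const settled = await Promise.allSettled(
    models.map(async (model): Promise<Candidate> => ({
      model,
      dataModels: await generate(model, prompt),
    })),
  );
  return settled
    .filter((r): r is PromiseFulfilledResult<Candidate> => r.status === "fulfilled")
    .map((r) => r.value);
}

// Usage with a fake generator in which one model fails.
const fakeGenerate: Generate = async (model, prompt) => {
  if (model === "model-c") throw new Error("timeout");
  return `// ${model}: Data Models for "${prompt}"`;
};

generateCandidates(
  ["model-a", "model-b", "model-c", "model-d", "model-e"],
  "A job board",
  fakeGenerate,
).then((candidates) => console.log(candidates.map((c) => c.model)));
```

Using `Promise.allSettled` rather than `Promise.all` means a single failing model doesn't discard the results of the other four.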

Using AI to only generate Data Models

Whilst the result is a working CRUD App, the approach taken is very different from most AI tools, which use AI to generate the entire App, leaving developers with a whole new code-base they didn't write but now need to maintain.

Instead, AI is only used to generate the initial Data Models within a TypeScript Declaration file. We've found this to be the format best supported by AI models, and also the best typed DSL for defining data models, with minimal syntax that's easy for humans to read and write.
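To illustrate why a typed declaration works well as a DSL for Data Models, the sketch below parses the property declarations of a small, hypothetical Contact model and maps each TypeScript type to a SQL column, with optional (`?`) properties becoming nullable columns. The line-based parser and the type mapping are assumptions made for illustration only; they are not how okai generates the App's code or DB Migrations.

```typescript
// A hypothetical Data Model, written in the style of a TypeScript Declaration file.
const source = `
export class Contact {
  id: number
  name: string
  email?: string
  createdDate: Date
}`;

// Naive TypeScript-to-SQL type mapping (an assumption for this sketch).
const sqlTypes: Record<string, string> = {
  number: "INTEGER",
  string: "TEXT",
  boolean: "BOOLEAN",
  Date: "TIMESTAMP",
};

interface Column {
  name: string;
  sqlType: string;
  nullable: boolean;
}

// Extracts `name: type` / `name?: type` property lines from a single class body.
function parseColumns(ts: string): Column[] {
  const propRe = /^\s*(\w+)(\?)?\s*:\s*(\w+)\s*;?\s*$/;
  const columns: Column[] = [];
  for (const line of ts.split("\n")) {
    const m = propRe.exec(line);
    if (!m) continue; // skips the class header, braces and blank lines
    const name = m[1] ?? "";
    const tsType = m[3] ?? "";
    columns.push({
      name: name.charAt(0).toUpperCase() + name.slice(1), // PascalCase column names
      sqlType: sqlTypes[tsType] ?? "TEXT",
      nullable: m[2] === "?",
    });
  }
  return columns;
}

// Renders the columns as a CREATE TABLE statement; non-optional properties get NOT NULL.
function createTable(table: string, cols: Column[]): string {
  const defs = cols.map((c) => `  ${c.name} ${c.sqlType}${c.nullable ? "" : " NOT NULL"}`);
  return `CREATE TABLE ${table} (\n${defs.join(",\n")}\n);`;
}

console.log(createTable("Contact", parseColumns(source)));
```

The point of the sketch is that the declaration already carries everything a table needs: names, types and optionality, with almost no extra syntax.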

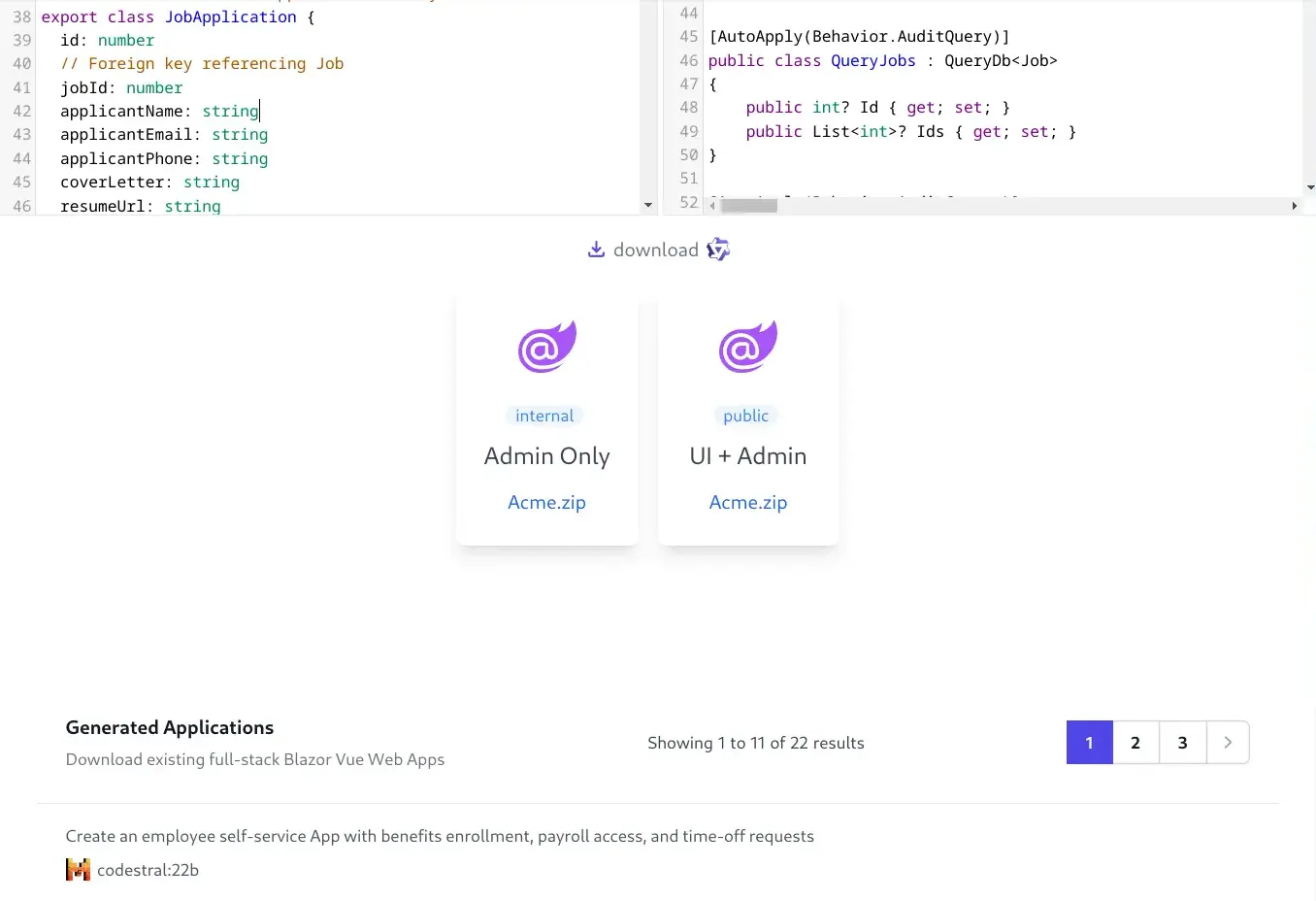

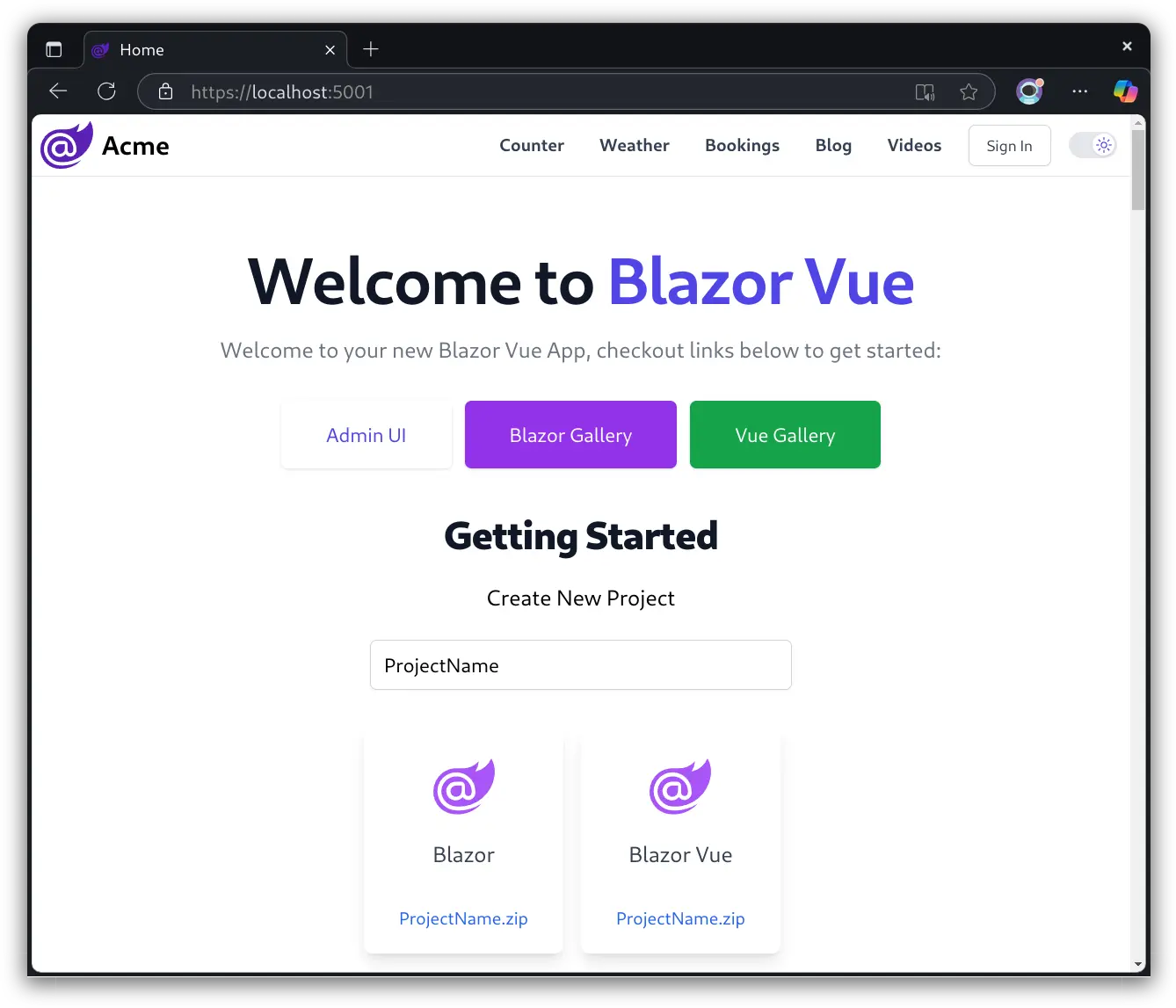

Download preferred Blazor Vue CRUD App

Once you've decided on the Data Models that best match your requirements, you can download the generated Blazor CRUD App using your preferred template:

Blazor Admin App

Admin Only - ideal for internal Admin Apps where the Admin UI is the Primary UI

Blazor Vue App

UI + Admin - Creates a new App from the blazor-vue template, ideal for Internet or public-facing Apps, sporting a full-featured public-facing UI for the Web App's users whilst enabling a back-office CRUD UI for Admin Users to manage their data.

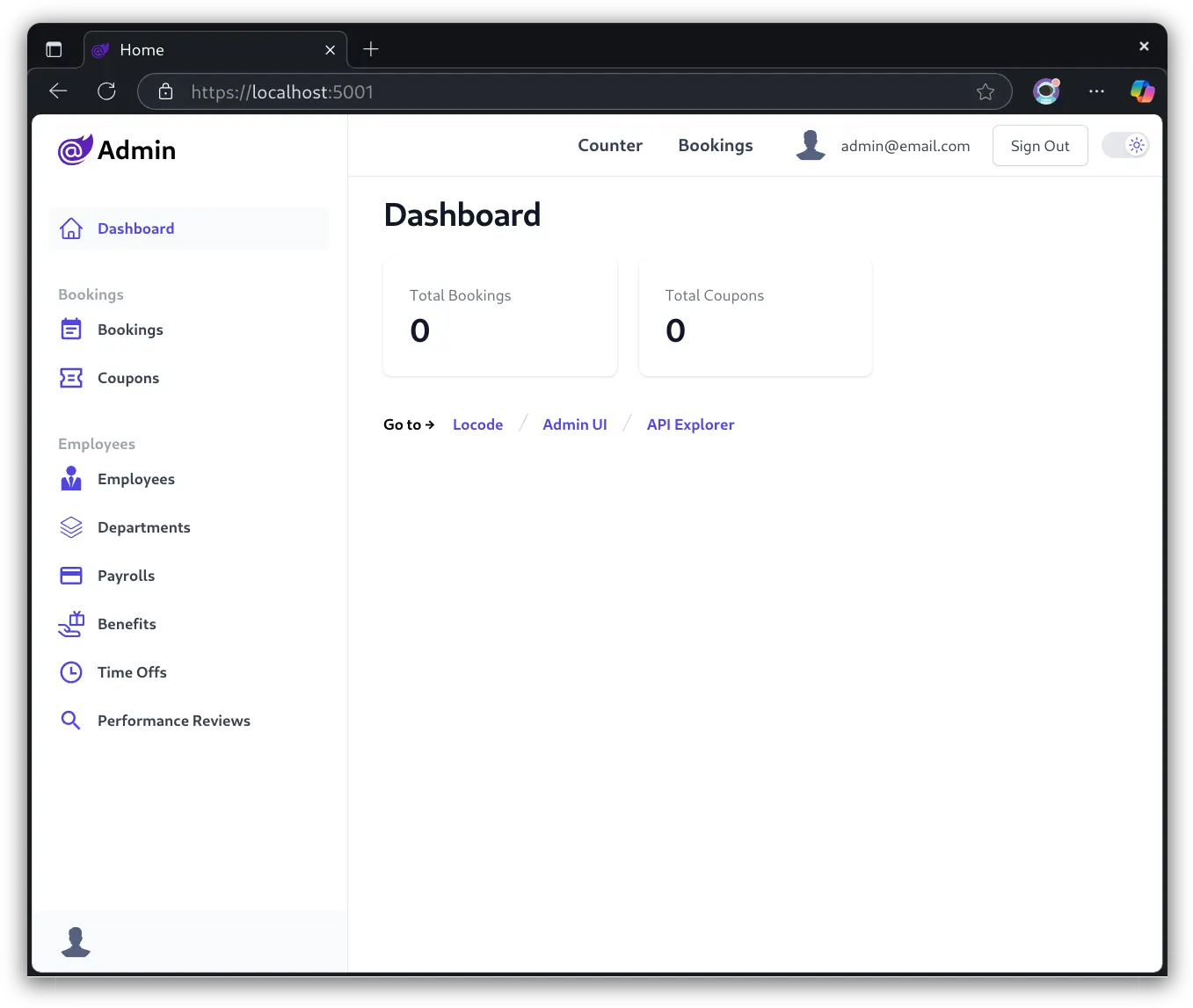

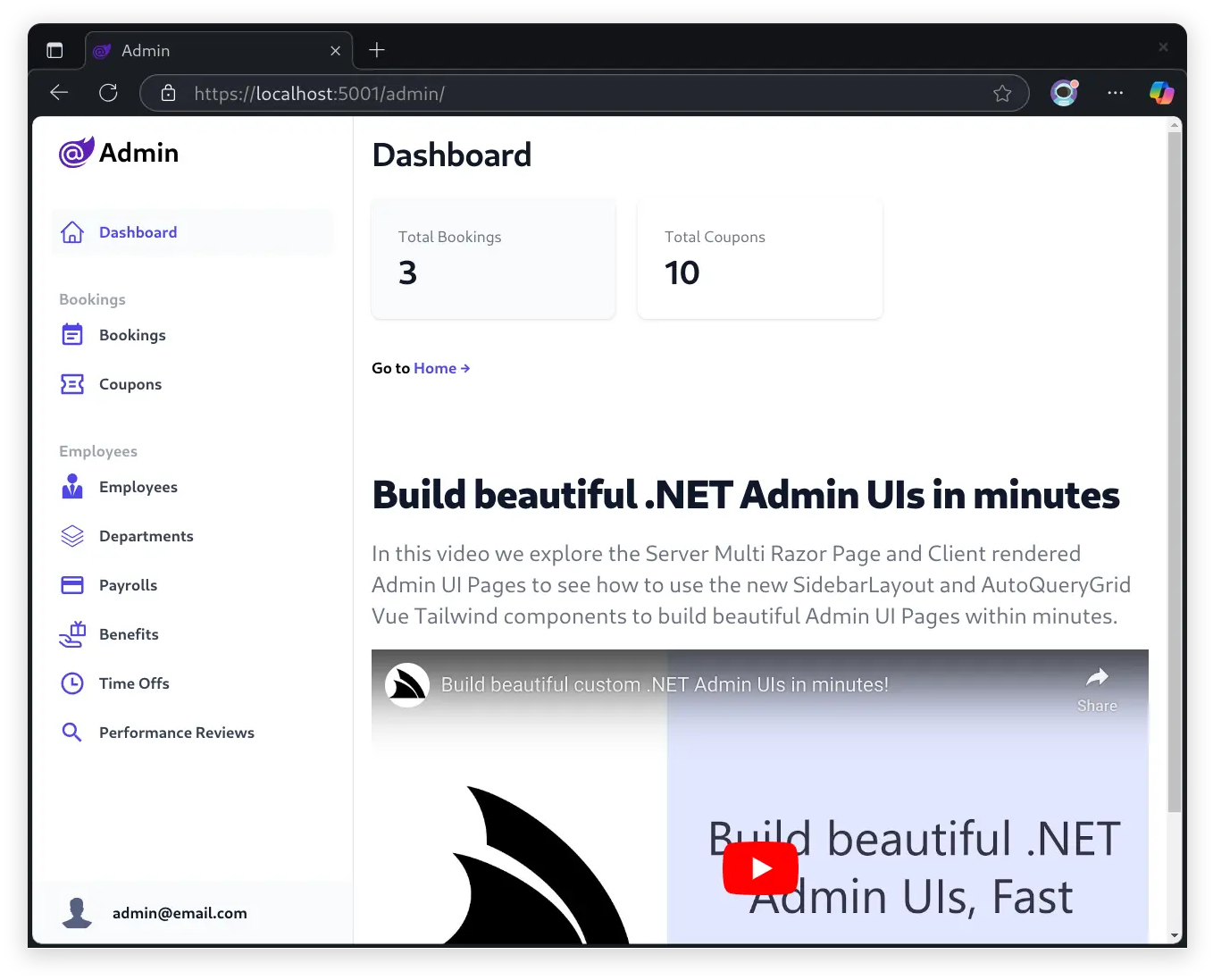

Clicking the Admin UI button will take you to the Admin UI at /admin.

Run Migrations

In order to create the necessary tables for the new functionality, you'll need to run the DB Migrations.

If migrations have never been run before, you can run the migrate npm script to create the initial database:

```bash
npm run migrate
```

If you've already run the migrations before, you can run the rerun:last npm script to drop and re-run the last migration:

```bash
npm run rerun:last
```

Alternatively you can nuke the App's database (e.g. App_Data/app.db) and recreate it from scratch with npm run migrate.
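The difference between the two scripts can be sketched with a toy, in-memory migration runner: `migrate` applies every migration that hasn't run yet, while `rerunLast` reverts (drops) the most recently applied migration and applies it again, which is handy after editing the last migration's Data Models. The `Migration` interface, `MigrationRunner` class and the Map standing in for the database are hypothetical; the templates' actual migrations aren't shown in this post.

```typescript
// Toy "database": table name -> column names (a stand-in, not a real RDBMS).
type Db = Map<string, string[]>;

// Hypothetical migration shape: `up` applies a change, `down` reverts it.
interface Migration {
  name: string;
  up(db: Db): void;
  down(db: Db): void;
}

class MigrationRunner {
  private readonly applied: string[] = [];
  private readonly migrations: Migration[];
  private readonly db: Db;

  constructor(migrations: Migration[], db: Db) {
    this.migrations = migrations;
    this.db = db;
  }

  // Like `npm run migrate`: apply, in order, every migration that hasn't run yet.
  migrate(): string[] {
    const ran: string[] = [];
    for (const m of this.migrations) {
      if (this.applied.includes(m.name)) continue;
      m.up(this.db);
      this.applied.push(m.name);
      ran.push(m.name);
    }
    return ran;
  }

  // Like `npm run rerun:last`: drop the last applied migration, then apply it again.
  rerunLast(): string | undefined {
    const last = this.applied[this.applied.length - 1];
    if (last === undefined) return undefined;
    const m = this.migrations.find((x) => x.name === last);
    if (m === undefined) throw new Error(`Unknown migration ${last}`);
    m.down(this.db);
    m.up(this.db);
    return last;
  }
}

// Usage: the last migration's columns are edited, then that migration is re-run.
const jobColumns = ["Id", "Title"];
const migrations: Migration[] = [
  { name: "CreateContact", up: (db) => db.set("Contact", ["Id", "Name"]), down: (db) => db.delete("Contact") },
  { name: "CreateJob", up: (db) => db.set("Job", [...jobColumns]), down: (db) => db.delete("Job") },
];

const db: Db = new Map();
const runner = new MigrationRunner(migrations, db);
runner.migrate(); // applies CreateContact, then CreateJob
jobColumns.push("Salary"); // edit the last migration's Data Model
runner.rerunLast(); // drops and recreates the Job table
console.log(db.get("Job"));
```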

Instant CRUD UI

After running the DB migrations, you can hit the ground running and start using the Admin UI to manage the new Data Model RDBMS Tables:

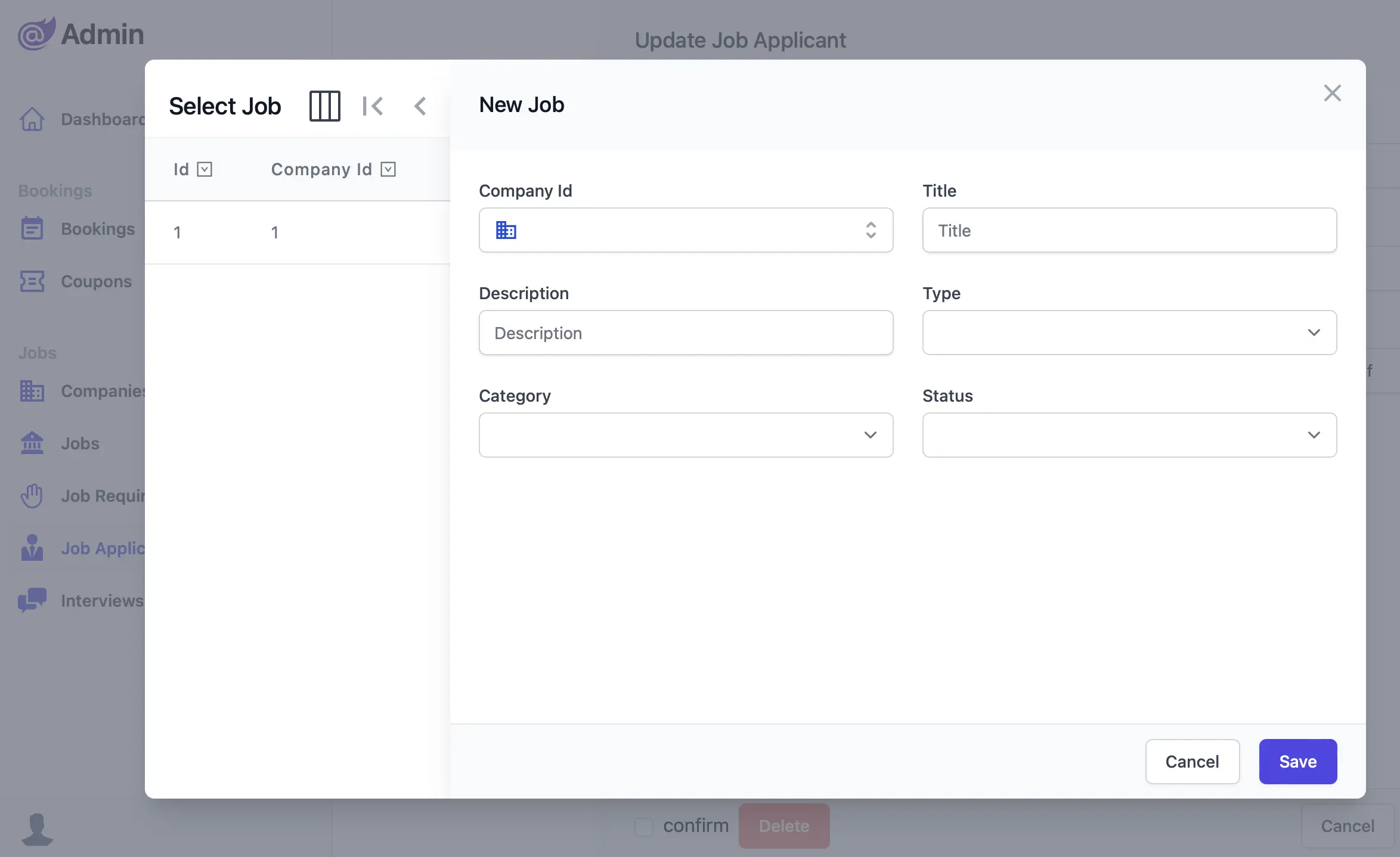

Create new Records from Search Dialog

We're continually improving the UX of the AutoQueryGrid Component used in generating CRUD UIs to enable a more productive and seamless workflow. One change to that end, which you can see in the video above, is the ability to add new Records from a Search dialog.

This lets you start creating new records immediately, without needing to create any lookup entries beforehand.

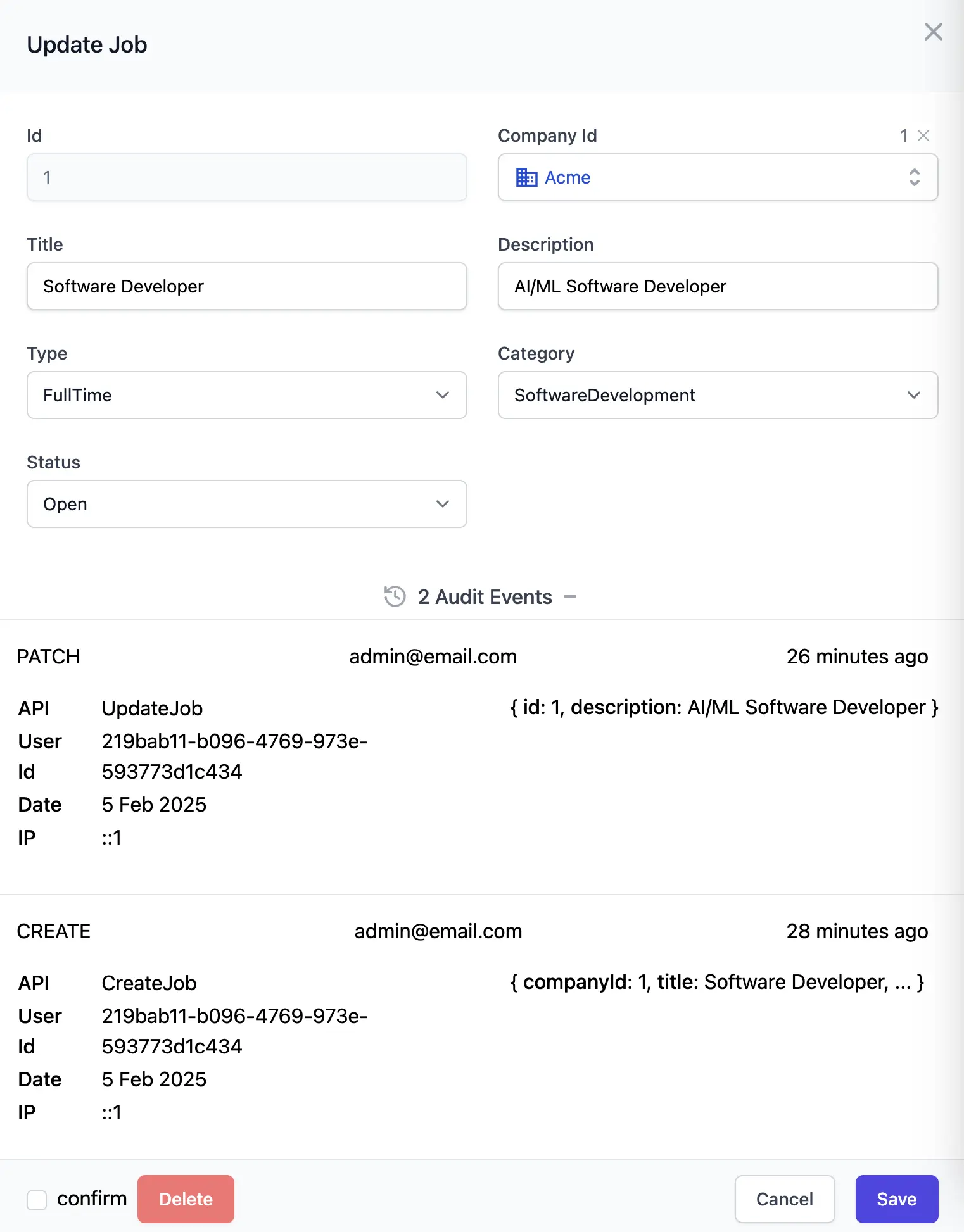

Audited Data Models

The TypeScript Data Models enable a rapid development experience for defining an App's Data Models, which are used to generate the AutoQuery CRUD APIs needed to support an Admin UI.

An example of this approach's productivity is the effortless support for maintaining a detailed audit history of changes to select tables by inheriting from the AuditBase base class, e.g.:

```typescript
export class Job extends AuditBase {
    // ...
}
```

You can then regenerate the App's code from the updated TypeScript Model definitions by running okai with the name of the definitions file:

```bash
npx okai Jobs.d.ts
```

This adds the CreatedBy, CreatedDate, ModifiedBy, ModifiedDate, DeletedBy and DeletedDate properties to the specified Table and also generates the necessary Audit Behaviors on the AutoQuery APIs to maintain the audit history for each CRUD operation.
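A rough TypeScript model of what those audit properties and behaviors do is sketched below. The `AuditBase` fields mirror the six properties listed above, but `auditCreate`, `auditModify`, `auditSoftDelete` and `auditQuery` are hypothetical stand-ins for the generated Audit Behaviors, and treating deletes as soft deletes (stamping DeletedBy/DeletedDate and filtering those rows out of queries) is an assumption based on the property names.

```typescript
// Hypothetical TypeScript mirror of the audit properties added by AuditBase.
abstract class AuditBase {
  createdBy = "";
  createdDate = new Date(0);
  modifiedBy = "";
  modifiedDate = new Date(0);
  deletedBy?: string;
  deletedDate?: Date;
}

class Job extends AuditBase {
  id: number;
  title: string;
  constructor(id: number, title: string) {
    super();
    this.id = id;
    this.title = title;
  }
}

// Create: stamps both the created and modified fields.
function auditCreate<T extends AuditBase>(row: T, user: string, now = new Date()): T {
  row.createdBy = row.modifiedBy = user;
  row.createdDate = row.modifiedDate = now;
  return row;
}

// Update: only the modified fields change.
function auditModify<T extends AuditBase>(row: T, user: string, now = new Date()): T {
  row.modifiedBy = user;
  row.modifiedDate = now;
  return row;
}

// Soft delete: the row is kept but marked as deleted.
function auditSoftDelete<T extends AuditBase>(row: T, user: string, now = new Date()): T {
  row.deletedBy = user;
  row.deletedDate = now;
  return row;
}

// Query: soft-deleted rows are excluded from results.
function auditQuery<T extends AuditBase>(rows: T[]): T[] {
  return rows.filter((r) => r.deletedDate === undefined);
}

const t0 = new Date("2025-01-01T00:00:00Z");
const t1 = new Date("2025-01-02T00:00:00Z");
const dev = auditCreate(new Job(1, "Backend Dev"), "admin@example.org", t0);
const designer = auditCreate(new Job(2, "Designer"), "admin@example.org", t0);
auditModify(dev, "manager@example.org", t1);
auditSoftDelete(designer, "manager@example.org", t1);
console.log(auditQuery([dev, designer]).map((j) => j.title));
```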

AutoQuery CRUD Audit Log

As the blazor-admin and blazor-vue templates are configured to use the AutoQuery CRUD Executable Audit Log in their Configure.AutoQuery.cs, the Audit Behaviors will also maintain an Audit Trail of all CRUD operations, which can be viewed in the Admin UI.
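An audit trail that can also be executed is essentially an append-only event log: every CRUD operation is recorded in order, and replaying the log rebuilds the data. The minimal TypeScript sketch below assumes that model; `AuditEvent`, `AuditLog`, `history` and `replay` are illustrative names, not the ServiceStack API or its storage schema.

```typescript
type Row = Record<string, unknown>;

// Illustrative event shape: one entry per CRUD operation.
type AuditEvent =
  | { kind: "create"; id: number; data: Row; user: string; at: Date }
  | { kind: "update"; id: number; data: Row; user: string; at: Date }
  | { kind: "delete"; id: number; user: string; at: Date };

class AuditLog {
  private readonly events: AuditEvent[] = [];

  // Append-only: events are never edited or removed.
  record(event: AuditEvent): void {
    this.events.push(event);
  }

  // The audit trail for a single record, oldest first.
  history(id: number): AuditEvent[] {
    return this.events.filter((e) => e.id === id);
  }

  // "Executing" the log: replaying every event in order rebuilds the table.
  replay(): Map<number, Row> {
    const table = new Map<number, Row>();
    for (const e of this.events) {
      switch (e.kind) {
        case "create":
          table.set(e.id, { ...e.data });
          break;
        case "update":
          table.set(e.id, { ...(table.get(e.id) ?? {}), ...e.data });
          break;
        case "delete":
          table.delete(e.id);
          break;
      }
    }
    return table;
  }
}

const log = new AuditLog();
const at = new Date("2025-01-01T00:00:00Z");
log.record({ kind: "create", id: 1, data: { title: "Backend Dev" }, user: "admin", at });
log.record({ kind: "update", id: 1, data: { title: "Senior Backend Dev" }, user: "manager", at });
log.record({ kind: "create", id: 2, data: { title: "Designer" }, user: "admin", at });
log.record({ kind: "delete", id: 2, user: "manager", at });
const rebuilt = log.replay();
console.log(rebuilt.get(1), log.history(1).length);
```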

FREE Access to the world's most popular AI Models

In addition to upgrading the models in text-to-blazor, we've also made them available to our generic chat prompt, which can be used as a convenient way to conduct personal research against many of the world's most popular Large Language Models, for Free!

No API Keys, no Signups, no installs, no cost: you can start immediately using the npx okai chat script to ask LLMs for assistance:

```bash
npx okai chat "command to copy a folder with rsync?"
```

This uses the default model (currently gemini-2.5-flash) to answer your question.

Select Preferred Model

You can also use your preferred model with the -m <model> flag, using either the model name or its alias, e.g. you can use Microsoft's Phi-4 14B model with:

```bash
npx okai -m phi chat "command to copy folder with rsync?"
```
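Resolving a model given either its name or its alias can be sketched as a lookup table with a fallback. The aliases below are copied from the ls models listing, and the 5-model cap comes from its USAGE notes, but `resolveModel` and `resolveModels` are hypothetical; the real CLI may resolve names differently (its USAGE example passes llama3.3, which isn't listed as an exact alias).

```typescript
// A subset of aliases copied from the `npx okai ls models` listing.
const aliases = new Map<string, string>([
  ["flash", "gemini-2.5-flash"],
  ["phi", "phi-4:14b"],
  ["phi-4", "phi-4:14b"],
  ["llama3", "llama3.3:70b"],
  ["codestral", "codestral:22b"],
  ["sonnet", "claude-sonnet-4-0"],
]);

// The USAGE notes cap a single request at 5 models.
const MAX_MODELS = 5;

// Hypothetical resolver: exact alias match, otherwise treat the input as a full model name.
function resolveModel(nameOrAlias: string): string {
  return aliases.get(nameOrAlias) ?? nameOrAlias;
}

// Parses a comma-separated list, as used by OKAI_MODELS or the -models flag.
function resolveModels(csv: string): string[] {
  const models = csv
    .split(",")
    .map((s) => s.trim())
    .filter((s) => s.length > 0)
    .map(resolveModel);
  if (models.length > MAX_MODELS) {
    throw new Error(`At most ${MAX_MODELS} models can be used per request, got ${models.length}`);
  }
  return models;
}

console.log(resolveModel("phi"));
console.log(resolveModels("codestral, flash, gpt-5-mini"));
```

A Map is used instead of a plain object so inputs such as "constructor" can't accidentally match inherited object properties.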

List Available Models

We're actively adding more great performing and leading experimental models as they're released.

You can view the list of available models with ls models:

```bash
npx okai ls models
```

Which at this time will return the following list of available models along with instructions for how to use them:

```
USAGE (5 models max):
a) OKAI_MODELS=codestral,llama3.3,flash
b) okai -models codestral,llama3.3,flash <prompt>
c) okai -m flash chat <prompt>

FREE MODELS:
claude-3-haiku (alias haiku)
codestral:22b (alias codestral)
deepseek-chat-v3.1:671b
deepseek-r1:671b (alias deepseek-r1)
deepseek-v3.1:671b (alias deepseek)
gemini-2.5-flash (alias flash)
gemini-2.5-flash-lite (alias flash-lite)
gemini-flash-thinking-2.5
gemma2:9b
gemma3:27b (alias gemma)
glm-4.5
glm-4.5-air
glm-4.5v
gpt-4.1-mini
gpt-4.1-nano
gpt-4o-mini
gpt-5-mini
gpt-5-nano
gpt-oss-120b
gpt-oss-20b
grok-code-fast-1 (alias grok-code)
kimi-k2
llama-4-maverick
llama-4-scout
llama3.1:70b
llama3.1:8b
llama3.3:70b (alias llama3)
llama4:109b (alias llama4)
llama4:400b
mistral-nemo:12b (alias mistral-nemo)
mistral-small:24b (alias mistral-small)
mistral:7b (alias mistral)
mixtral:8x22b (alias mixtral)
nova-lite
nova-micro
phi-4:14b (alias phi,phi-4)
qwen3-coder
qwen3-coder:30b
qwen3:235b
qwen3:30b
qwen3:32b
qwen3:8b

PREMIUM MODELS: *
claude-3-5-haiku
claude-3-5-sonnet
claude-3-7-sonnet
claude-3-sonnet
claude-sonnet-4-0 (alias sonnet)
gemini-2.5-pro (alias gemini-pro)
gpt-4.1
gpt-4o
gpt-5
gpt-5-chat
grok-4 (alias grok)
mistral-large:123b
nova-pro
o4-mini
o4-mini-high

* requires valid license:
a) SERVICESTACK_LICENSE=<key>
b) SERVICESTACK_CERTIFICATE=<LC-XXX>
c) okai -models <premium,models> -license <license> <prompt>
```

You'll be able to use any of the great performing, inexpensive models listed under FREE MODELS for Free, whilst ServiceStack customers with an active commercial license can also use any of the more expensive and better performing models listed under PREMIUM MODELS by either:

- Setting the SERVICESTACK_LICENSE Environment Variable with your License Key
- Setting the SERVICESTACK_CERTIFICATE Environment Variable with your License Certificate
- Inline, using the -license flag with either the License Key or Certificate

FREE for Personal Usage

To be able to maintain this as a free service, we're limiting it to use as a personal assistance and research tool for developers by capping usage at 60 requests/hour, which should be more than enough for most personal usage and research whilst deterring its use in automated tools.


Rate limiting is implemented with a sliding Token Bucket algorithm that replenishes 1 additional request every 60s.
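The limit can be sketched as a token bucket: each request spends one token and one token is added back every 60 seconds, so the sustained rate can't exceed 60 requests/hour. A capacity of 60 tokens, starting with a full bucket, refilling in whole 60s steps and passing explicit timestamps (for testability) are all assumptions of this TypeScript sketch, not details of the service's implementation.

```typescript
class TokenBucket {
  private tokens: number;
  private lastRefill: number;
  private readonly capacity: number;
  private readonly refillIntervalMs: number;

  constructor(capacity: number, refillIntervalMs: number, now: number = Date.now()) {
    this.capacity = capacity;
    this.refillIntervalMs = refillIntervalMs;
    this.tokens = capacity; // assumption: start with a full bucket
    this.lastRefill = now;
  }

  // Adds one token per whole elapsed interval, never exceeding capacity.
  private refill(now: number): void {
    const intervals = Math.floor((now - this.lastRefill) / this.refillIntervalMs);
    if (intervals > 0) {
      this.tokens = Math.min(this.capacity, this.tokens + intervals);
      this.lastRefill += intervals * this.refillIntervalMs;
    }
  }

  // Returns true and spends a token if the request is allowed, false if rate limited.
  tryConsume(now: number = Date.now()): boolean {
    this.refill(now);
    if (this.tokens === 0) return false;
    this.tokens--;
    return true;
  }
}

// 60 requests burst at t=0, then one more request becomes available every 60s.
const bucket = new TokenBucket(60, 60_000, 0);
let allowed = 0;
for (let i = 0; i < 61; i++) if (bucket.tryConsume(0)) allowed++;
console.log(allowed, bucket.tryConsume(59_999), bucket.tryConsume(60_000));
```

Carrying the remainder in `lastRefill` (rather than resetting it to `now`) keeps partial intervals from being lost between requests.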