AI Chat - A Customizable, Private, ChatGPT-like UI

AI Chat UI

Another major value proposition of AI Chat is the ability to offer a ChatGPT-like UI to your users while you control the API Keys, billing, and sanctioned providers they can access, maintaining your own Fast, Local, and Private access to AI from within your organization.

Install

AI Chat can be added to any .NET 8+ project by installing the ServiceStack.AI.Chat NuGet package and its configuration with:

```bash
x mix chat
```

Which drops in this simple Modular Startup that adds the ChatFeature and, if you want it, registers a link to its UI on the Metadata Page:

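```csharp
using ServiceStack;

// Registers this class as a Modular Startup
[assembly: HostingStartup(typeof(ConfigureAiChat))]

public class ConfigureAiChat : IHostingStartup
{
    public void Configure(IWebHostBuilder builder) => builder
        .ConfigureServices(services => {
            // Register the AI Chat plugin (UI and APIs)
            services.AddPlugin(new ChatFeature());
            // Optional: add a link to AI Chat's UI on the Metadata Page
            services.ConfigurePlugin<MetadataFeature>(feature => {
                feature.AddPluginLink("/chat", "AI Chat");
            });
        });
}
```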

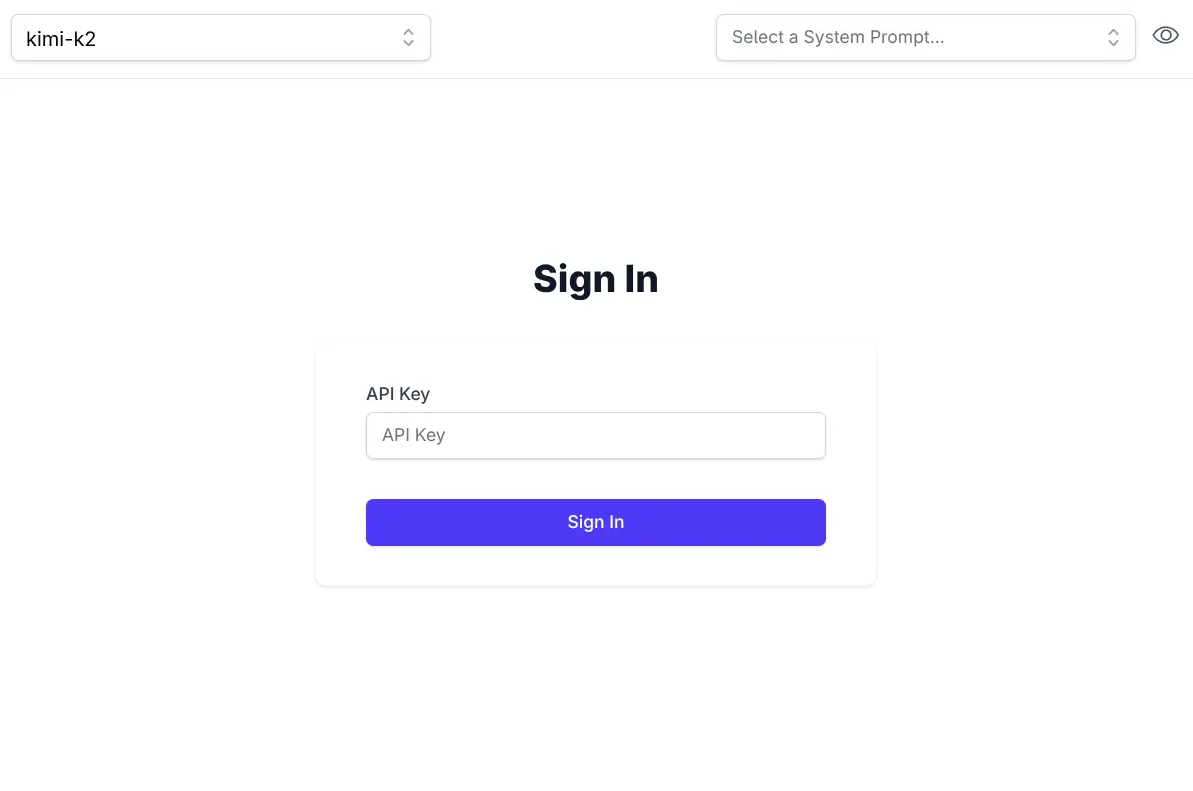

Identity Auth or Valid API Key

AI Chat makes use of ServiceStack's new API Keys and Identity Auth APIs: authenticated Identity Auth users can use it directly, while unauthenticated users will need to provide a valid API Key.

If needed, ValidateRequest can be used to further restrict access to AI Chat's UI and APIs. For example, you can restrict access to API Keys with the Admin scope with:

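```csharp
services.AddPlugin(new ChatFeature {
    // Allow API Keys with the Admin scope (null = no error), otherwise redirect to /admin-ui
    ValidateRequest = async req =>
        req.GetApiKey()?.HasScope(RoleNames.Admin) == true
            ? null
            : HttpResult.Redirect("/admin-ui"),
});
```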

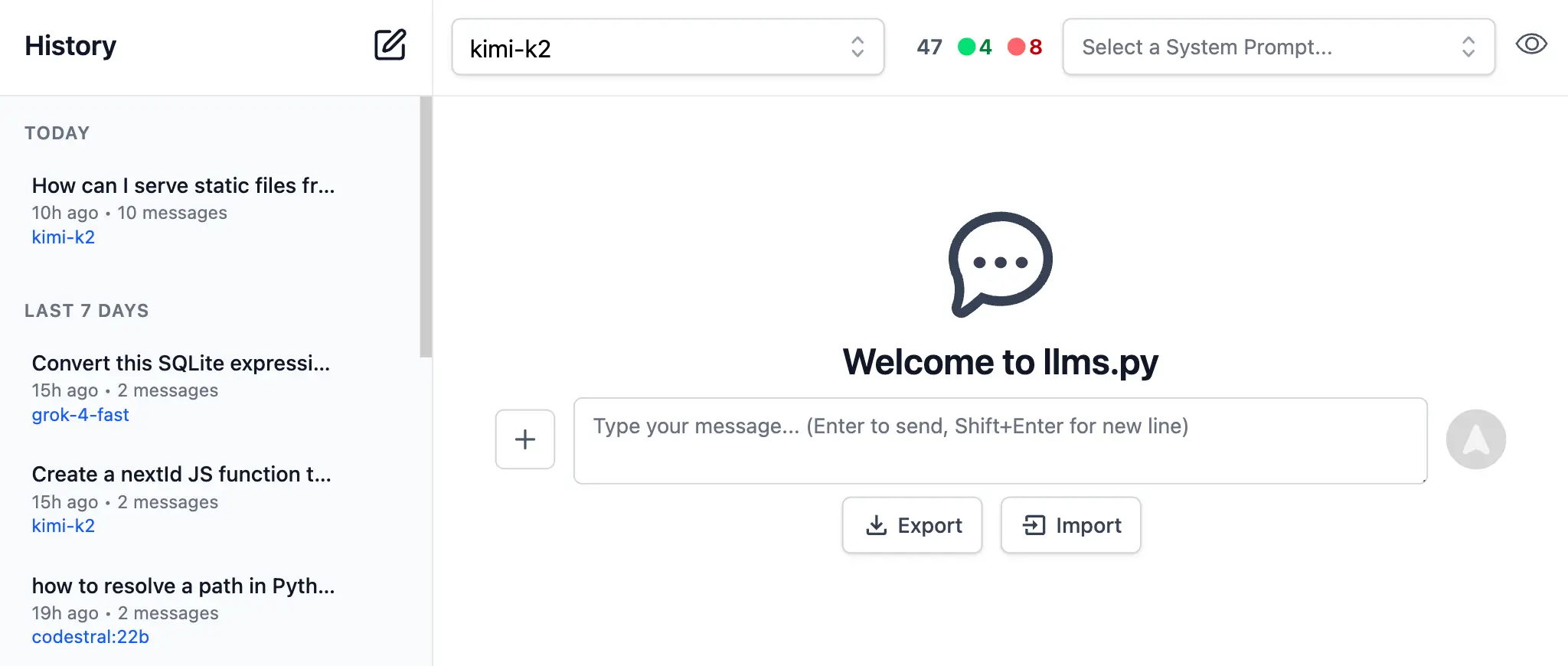

Import / Export

All data is stored locally in the user's browser IndexedDB. When needed, you can back up and transfer your entire chat history between different browsers using the Export and Import features on the home page.

Simple and Flexible UI

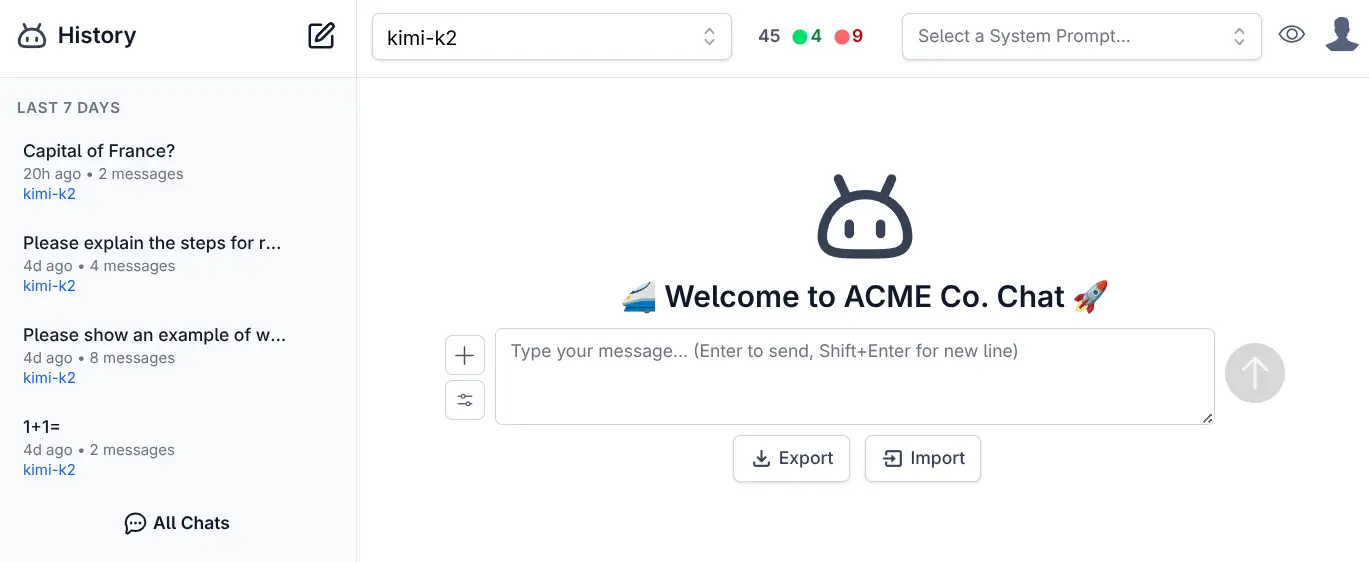

Like all of ServiceStack's built-in UIs, AI Chat is naturally customizable: you can override any of AI Chat's Vue Components with your own by placing them in your /wwwroot/chat folder.

This lets you customize the appearance and behavior of AI Chat's UI to match your App's branding and needs.

Customize

The built-in ui.json configuration can be overridden by adding your own ui.json, with your preferred system prompts and other defaults, to your local folder.

Alternatively, ConfigJson and UiConfigJson can be used to load custom JSON configuration from a different source, e.g.:

```csharp
// `vfs` is assumed to be an IVirtualFiles provider for your App's files
services.AddPlugin(new ChatFeature {
    // Use custom llms.json configuration
    ConfigJson = vfs.GetFile("App_Data/llms.json").ReadAllText(),
    // Use custom ui.json configuration
    UiConfigJson = vfs.GetFile("App_Data/ui.json").ReadAllText(),
});
```

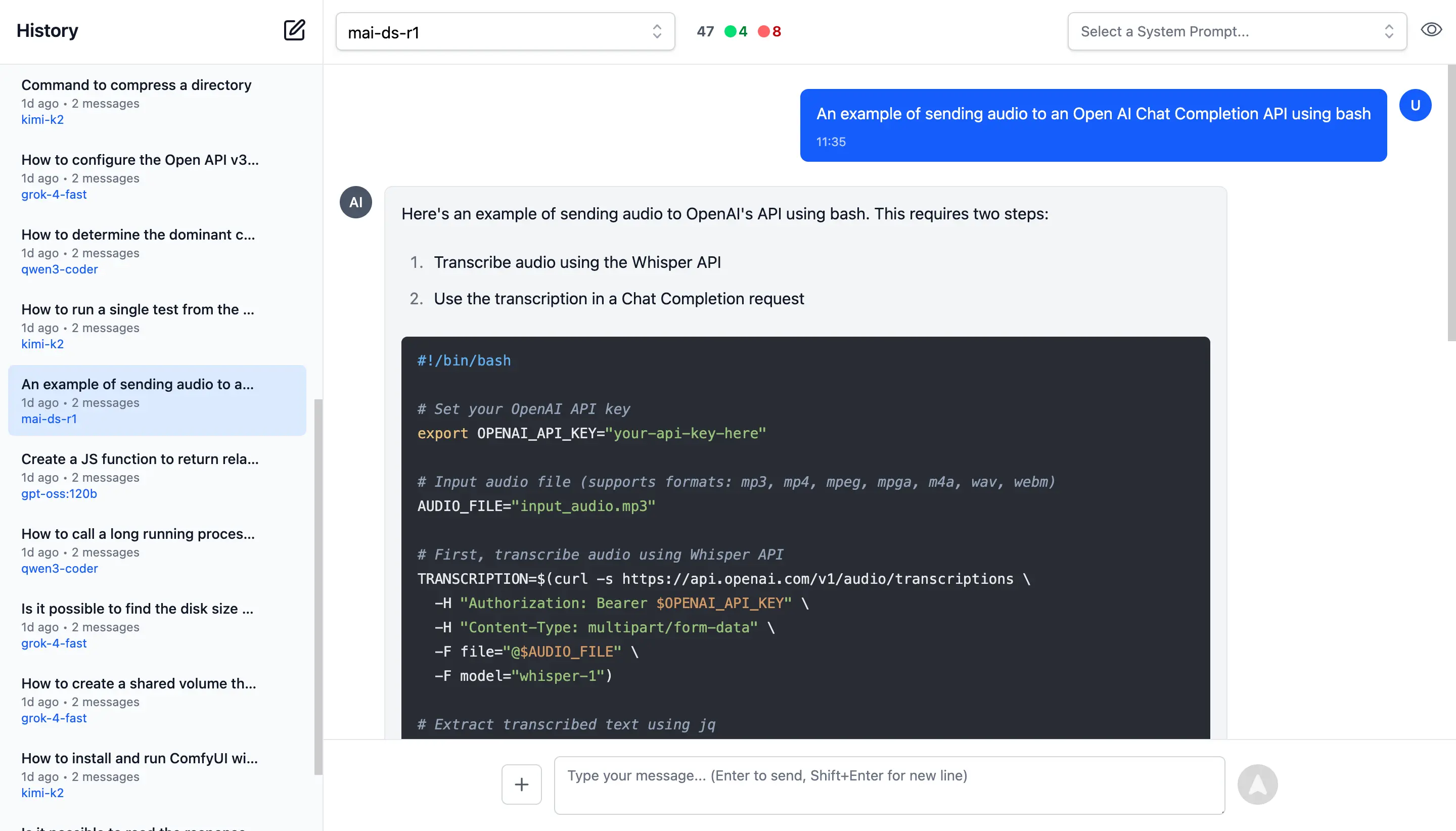

Rich Markdown & Syntax Highlighting

To maximize readability, there's full support for Markdown and syntax highlighting for the most popular programming languages.

To make AI responses quick and easy to reuse, Copy Code icons appear when hovering over any message or code block.

Rich, Multimodal Inputs

The Chat UI goes beyond just text and can take advantage of the multimodal capabilities of modern LLMs with support for Image, Audio, and File inputs.

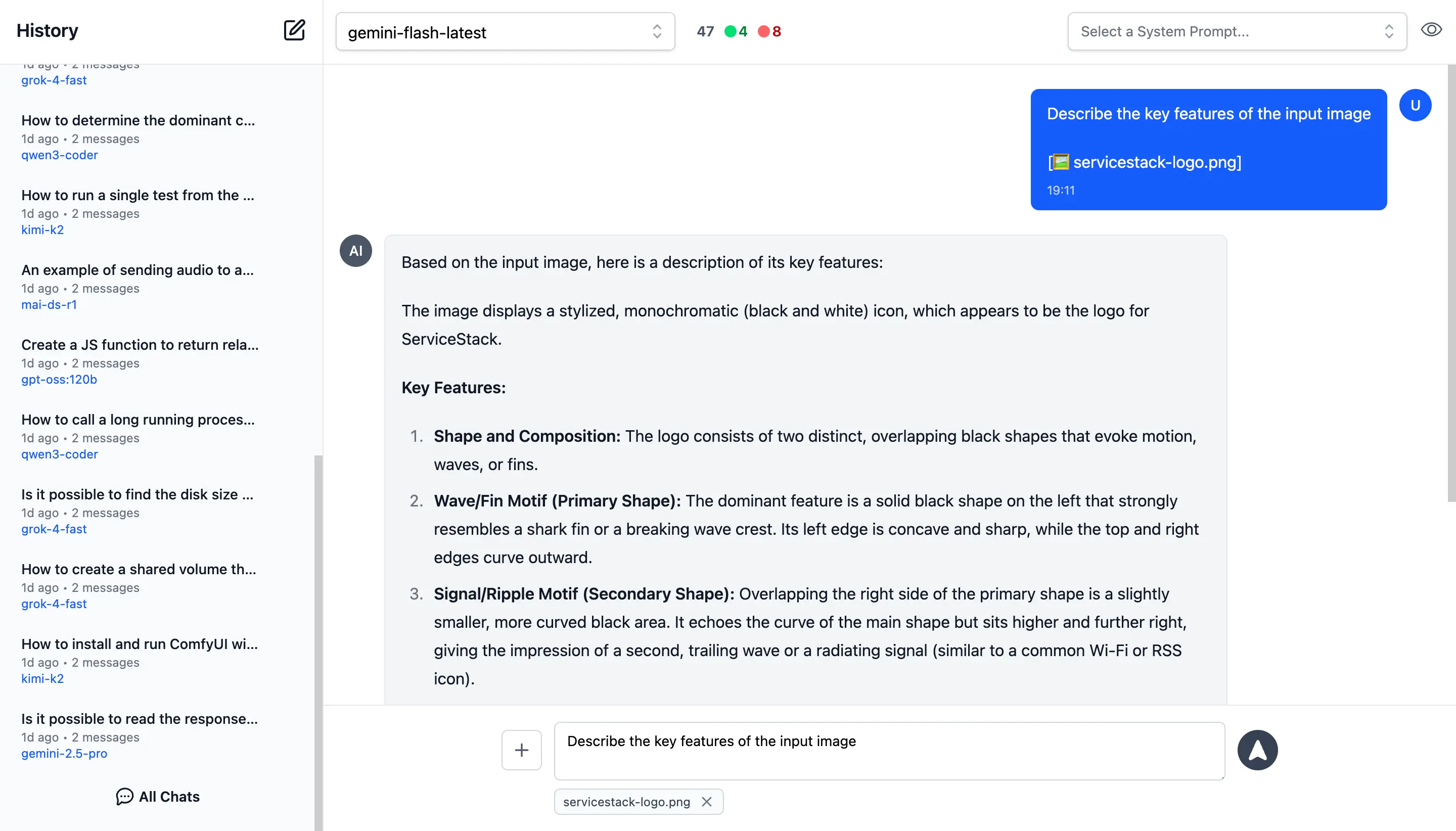

🖼️ 1. Image Inputs & Analysis

Images can be uploaded directly into your conversations with vision-capable models for comprehensive image analysis.

Visual AI responses are highly dependent on the model used. This is a typical example of the visual analysis of our ServiceStack logo provided by the latest Gemini Flash model:

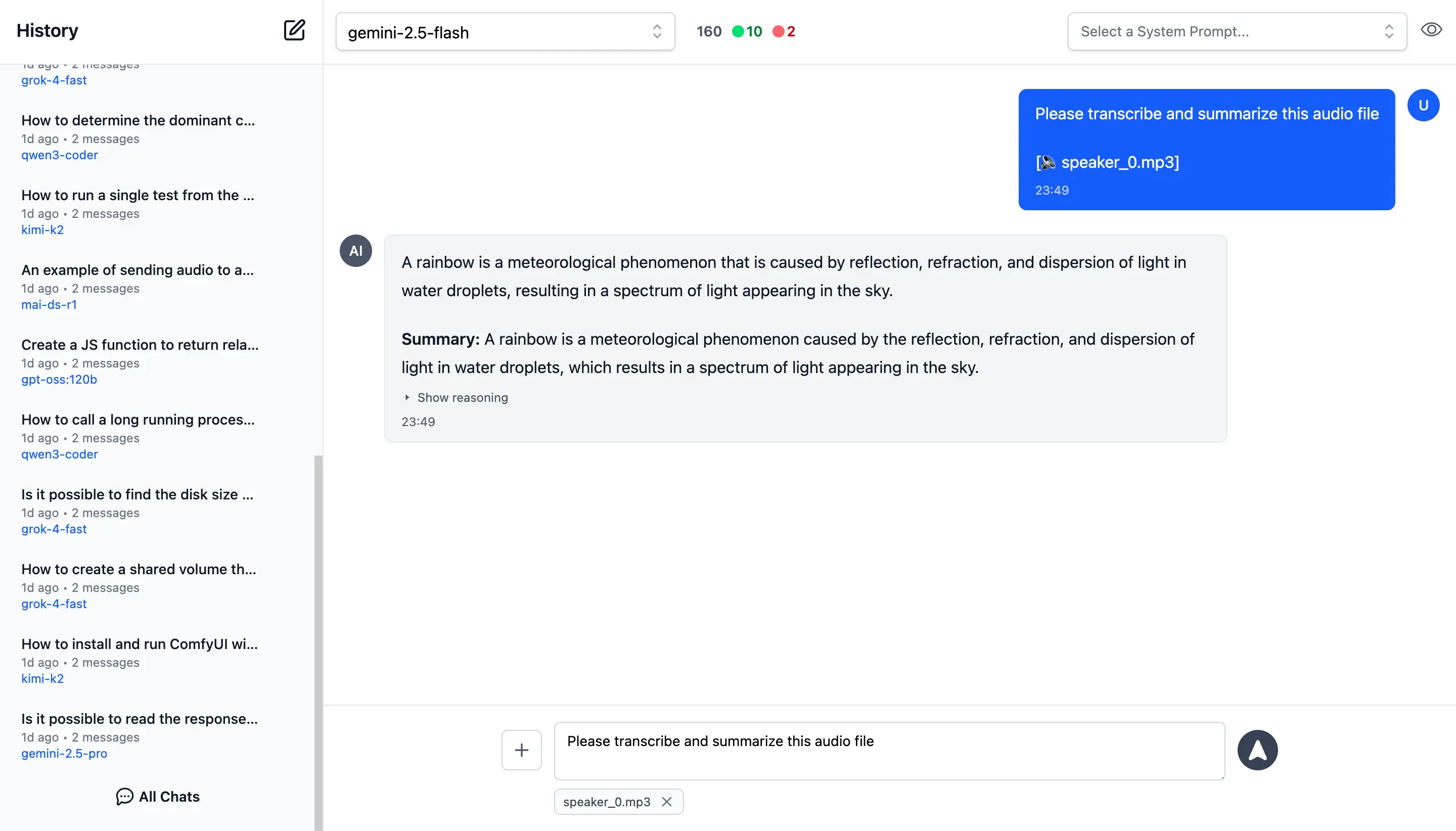

🎤 2. Audio Input & Transcription

Likewise, you can upload audio files and have them transcribed and analyzed by multimodal models with audio capabilities.

In the example below, an audio file is uploaded with system and user prompts instructing the model to transcribe and summarize its content, with the model's multimodal capabilities integrated right within the chat interface.

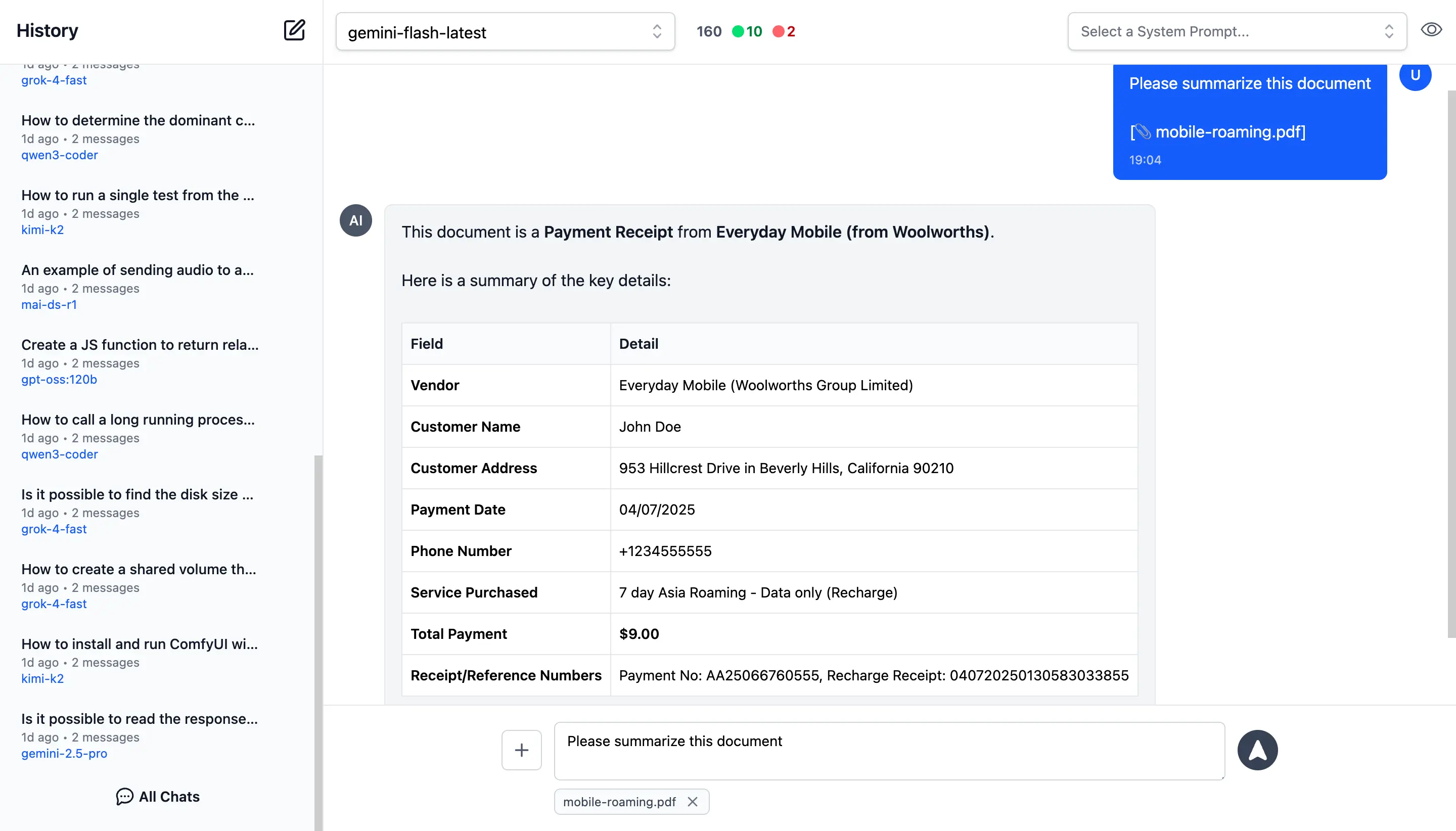

📎 3. File and PDF Attachments

In addition to images and audio, you can also upload documents, PDFs, and other files to capable models to extract insights, summarize or analyze their content.

Document Processing Use Cases:

- PDF Analysis: Upload PDF documents for content extraction and analysis

- Data Extraction: Extract specific information from structured documents

- Document Summarization: Get concise summaries of lengthy documents

- Query Content: Ask questions about specific content in documents

- Batch Processing: Upload multiple files for comparative analysis

Perfect for research, document review, data analysis, and content extraction.

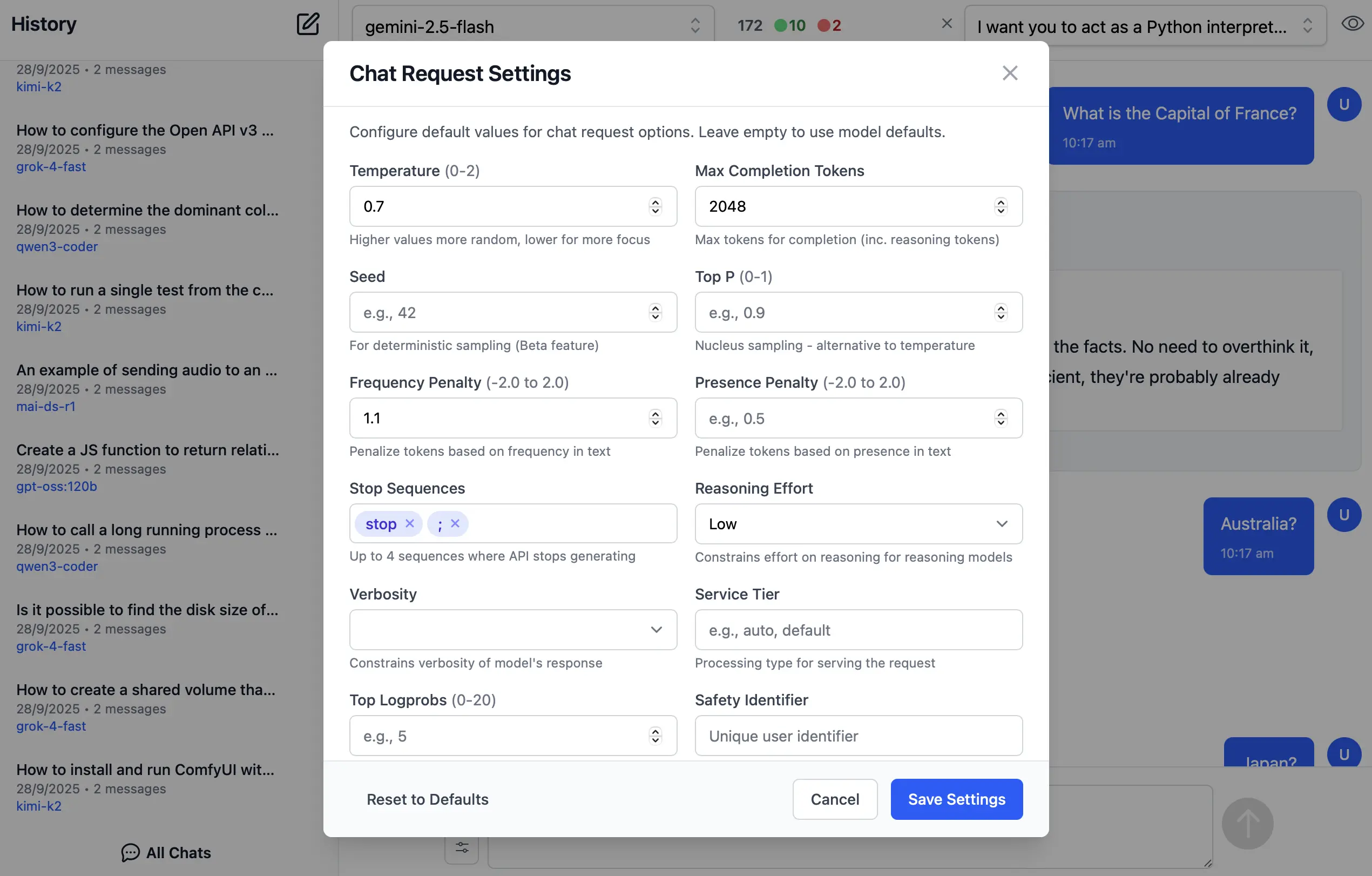

Custom AI Chat Requests

Send custom Chat Completion requests through the settings dialog, allowing users to fine-tune their AI requests with advanced options including:

- Temperature (0-2) for controlling response randomness

- Max Completion Tokens to limit response length

- Seed values for deterministic sampling

- Top P (0-1) for nucleus sampling

- Frequency & Presence Penalty (-2.0 to 2.0) for reducing repetition

- Stop Sequences to control where the API stops generating

- Reasoning Effort constraints for reasoning models

- Top Logprobs (0-20) for token probability analysis

- Verbosity settings

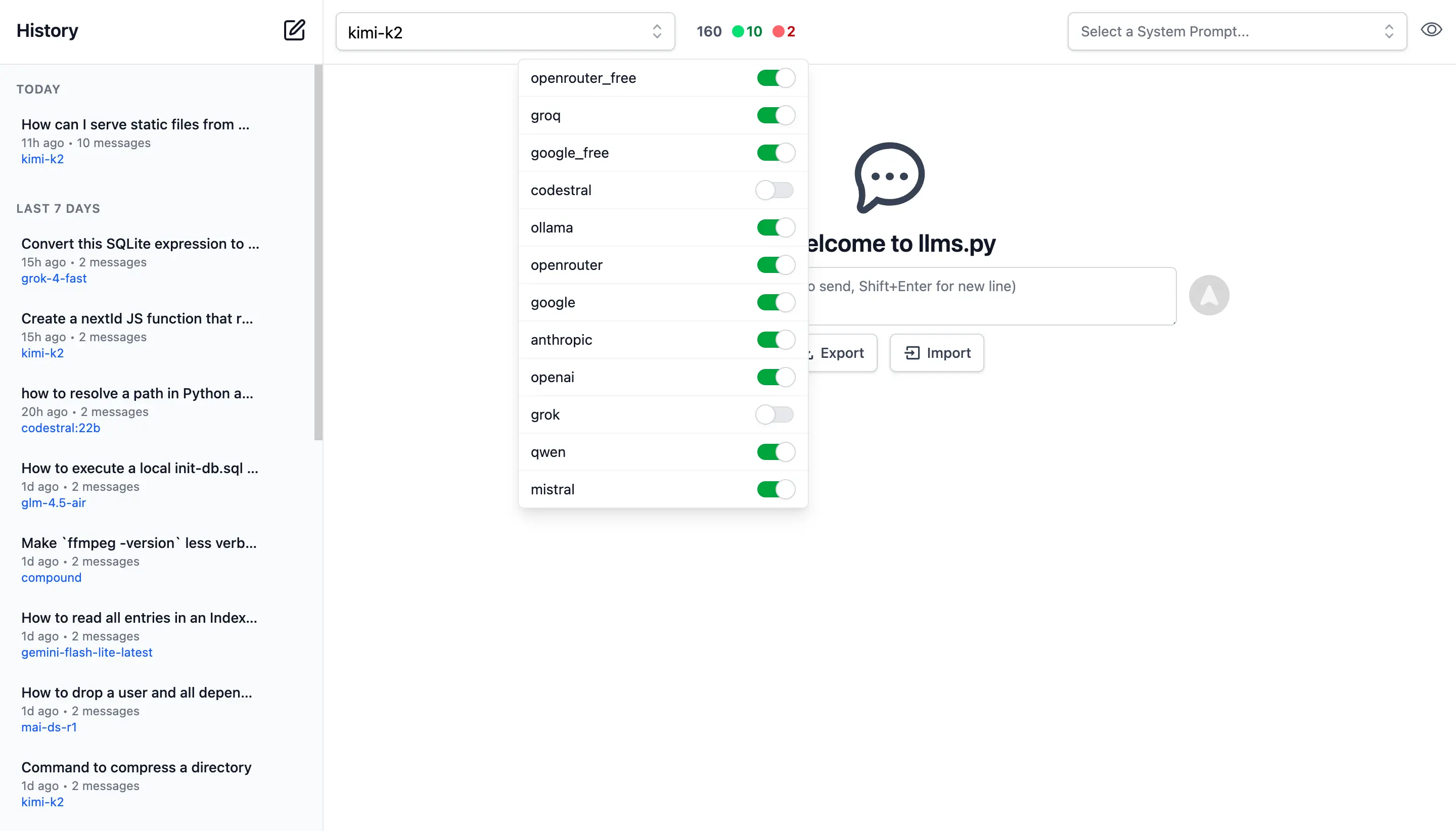
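The ranges above match those of OpenAI-style Chat Completion parameters. As a hypothetical sketch (not part of ServiceStack.AI.Chat), a client could check values against these ranges before sending a request; `ValidateChatOptions` and the snake_case parameter names used in its messages are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of ServiceStack.AI.Chat: returns a message for
// each optional parameter that falls outside the ranges listed above.
// Null means "not set" and is always accepted.
List<string> ValidateChatOptions(double? temperature, double? topP,
    double? frequencyPenalty, double? presencePenalty, int? topLogprobs)
{
    var errors = new List<string>();

    void Check(string name, double? value, double min, double max)
    {
        if (value is double v && (v < min || v > max))
            errors.Add($"{name} must be between {min} and {max}, was {v}");
    }

    Check("temperature", temperature, 0, 2);
    Check("top_p", topP, 0, 1);
    Check("frequency_penalty", frequencyPenalty, -2, 2);
    Check("presence_penalty", presencePenalty, -2, 2);
    Check("top_logprobs", topLogprobs, 0, 20);
    return errors;
}

// Usage: temperature 2.5 is out of range, the other values are valid
foreach (var error in ValidateChatOptions(2.5, 0.9, null, 0.5, 5))
    Console.WriteLine(error);
```

Treating unset (null) values as valid mirrors how these options are optional in a Chat Completion request.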

Enable / Disable Providers

Admin users can enable or disable providers at runtime.

Providers that support the requested model are invoked in the order they're defined in llms.json.

If a provider fails, the next available provider is tried.

By default, providers with free tiers are ordered first, followed by local providers and then premium cloud providers, all of which can be enabled or disabled in the UI:

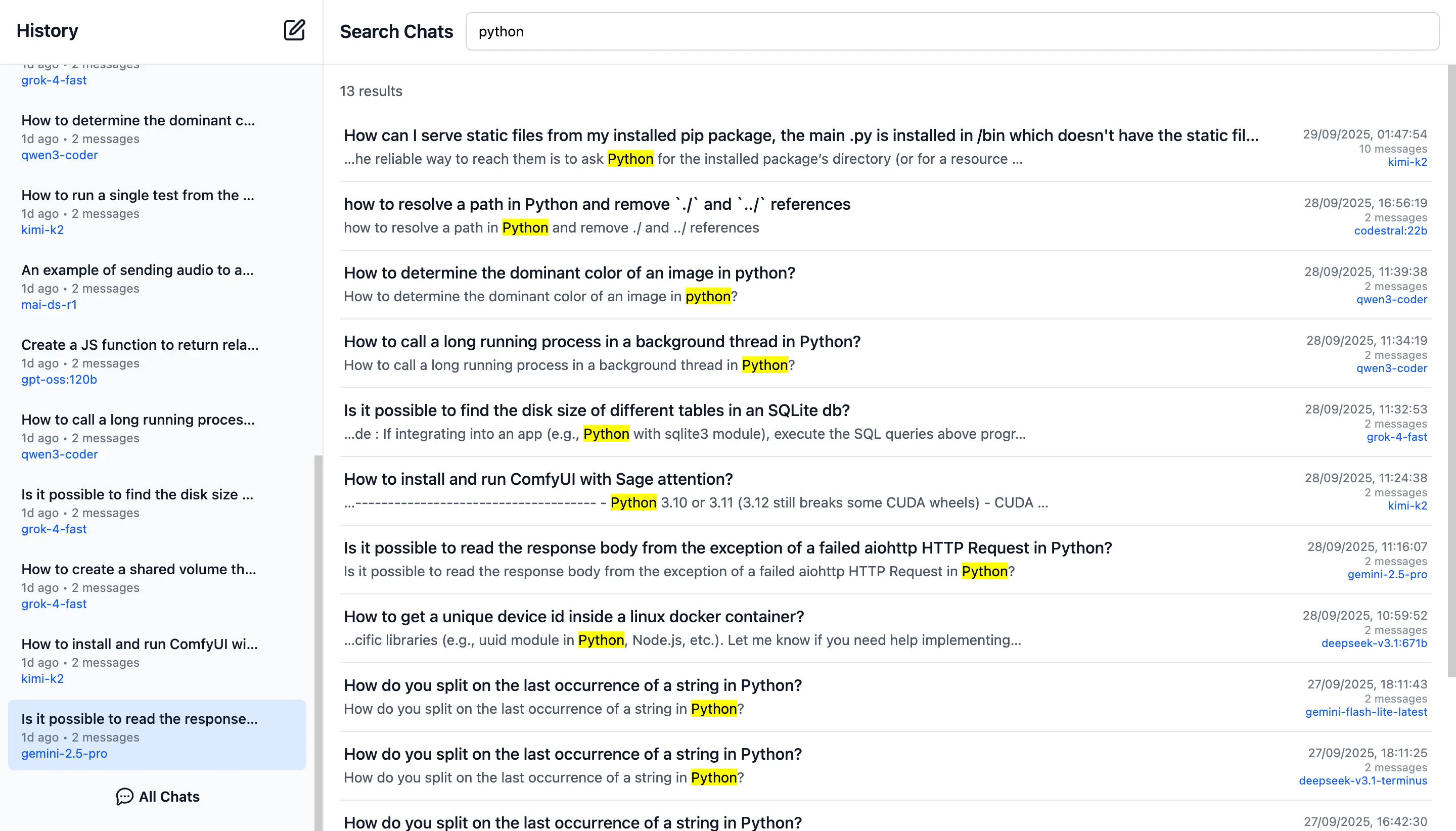
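To illustrate the ordering and fallback rules above, here's a minimal C# sketch. It is not AI Chat's implementation: the provider tuple, the `CompleteWithFallback` function, the provider and model names, and the simulated rate-limit failure are all hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical providers, listed in the order they'd appear in llms.json.
// Each has a name, an enabled flag, the models it supports and a send function.
var providers = new List<(string Name, bool Enabled, HashSet<string> Models, Func<string, string> Send)>
{
    // Simulates a free-tier provider that is currently failing
    ("free-tier", true, new HashSet<string> { "model-a" }, p => throw new Exception("rate limited")),
    ("local", true, new HashSet<string> { "model-a" }, p => $"local: {p}"),
    // Disabled in the UI, so it is never tried
    ("premium", false, new HashSet<string> { "model-a", "model-b" }, p => $"premium: {p}"),
};

string CompleteWithFallback(string model, string prompt)
{
    var failures = new List<Exception>();
    // Try enabled providers that support the model, in definition order
    foreach (var provider in providers.Where(x => x.Enabled && x.Models.Contains(model)))
    {
        try
        {
            return provider.Send(prompt);
        }
        catch (Exception ex)
        {
            failures.Add(ex); // remember the failure and move on to the next provider
        }
    }
    throw new AggregateException($"No enabled provider could complete '{model}'", failures);
}

Console.WriteLine(CompleteWithFallback("model-a", "Hello")); // local: Hello
```

Collecting each failure into an AggregateException means that when every eligible provider fails, the caller can still see why each one did.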

Search History

Quickly find past conversations with built-in search:

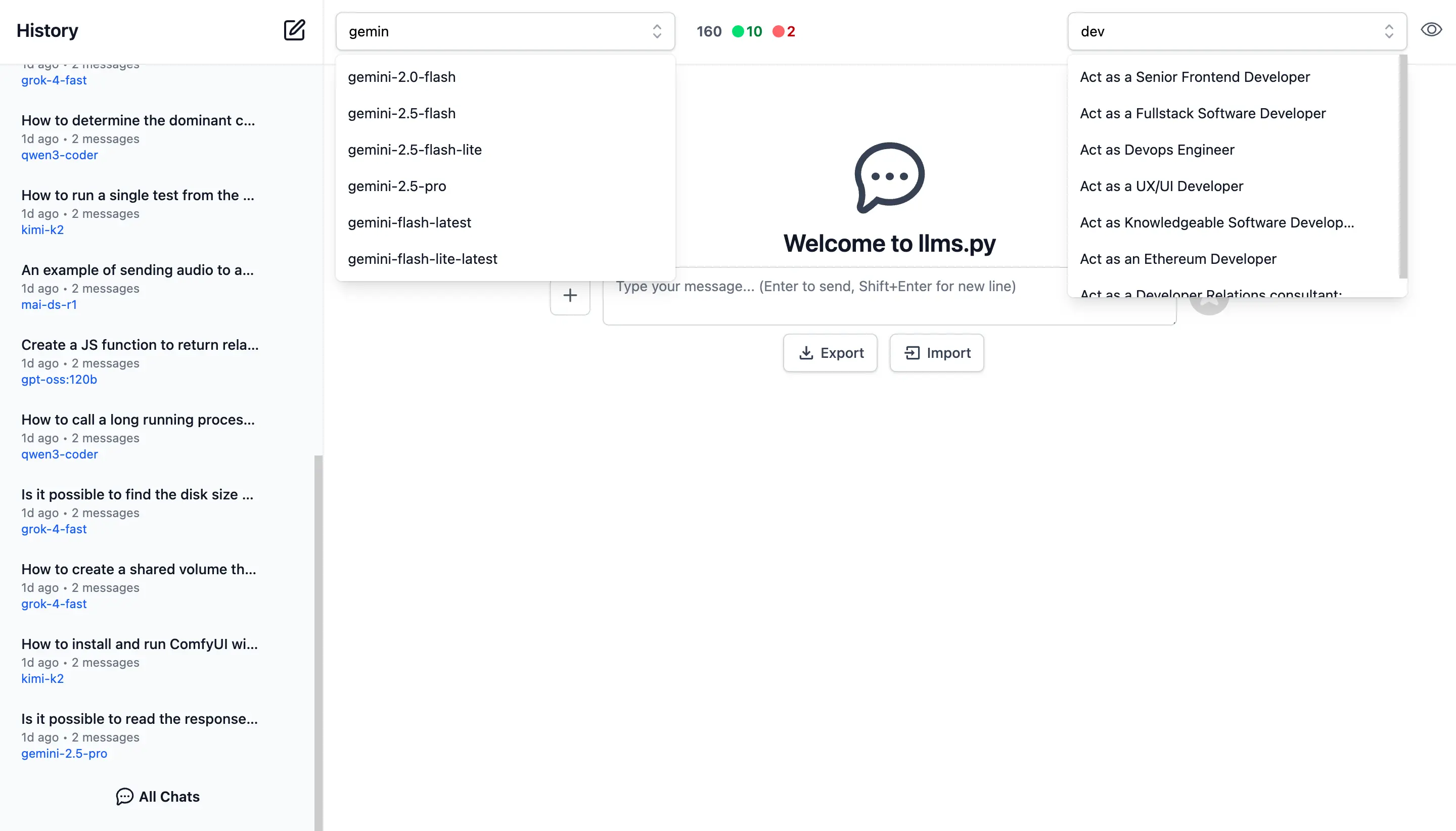

Smart Autocomplete for Models & System Prompts

Autocomplete components are used to quickly find and select the preferred model and system prompt.

Only models from enabled providers appear in the dropdown, and they become available as soon as their providers are enabled.
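A hypothetical sketch of that filtering: each provider is modelled as a name, an enabled flag and the models it serves (all made-up names), and the dropdown list is recomputed from the enabled providers on every lookup, so enabling a provider makes its models show up straight away. This is an illustration, not AI Chat's own code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical providers: (name, enabled flag, models served)
var providers = new List<(string Name, bool Enabled, string[] Models)>
{
    ("provider-a", true, new[] { "model-1", "model-2" }),
    ("provider-b", false, new[] { "model-3" }),
    ("provider-c", true, new[] { "model-2", "model-4" }),
};

// Models offered in the dropdown: only those served by an enabled provider,
// with models served by several providers listed once
string[] AvailableModels() => providers
    .Where(p => p.Enabled)
    .SelectMany(p => p.Models)
    .Distinct()
    .ToArray();

Console.WriteLine(string.Join(", ", AvailableModels())); // model-1, model-2, model-4

// Enabling provider-b at runtime: its model appears on the next lookup
providers[1] = (providers[1].Name, true, providers[1].Models);
Console.WriteLine(string.Join(", ", AvailableModels())); // model-1, model-2, model-3, model-4
```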

Comprehensive System Prompt Library

Access a curated collection of 200+ professional system prompts designed for various use cases, from technical assistance to creative writing.

System prompts can be added, removed and sorted in your ui.json:

```json
{
  "prompts": [
    {
      "id": "it-expert",
      "name": "Act as an IT Expert",
      "value": "I want you to act as an IT expert. You will be responsible..."
    },
    ...
  ]
}
```

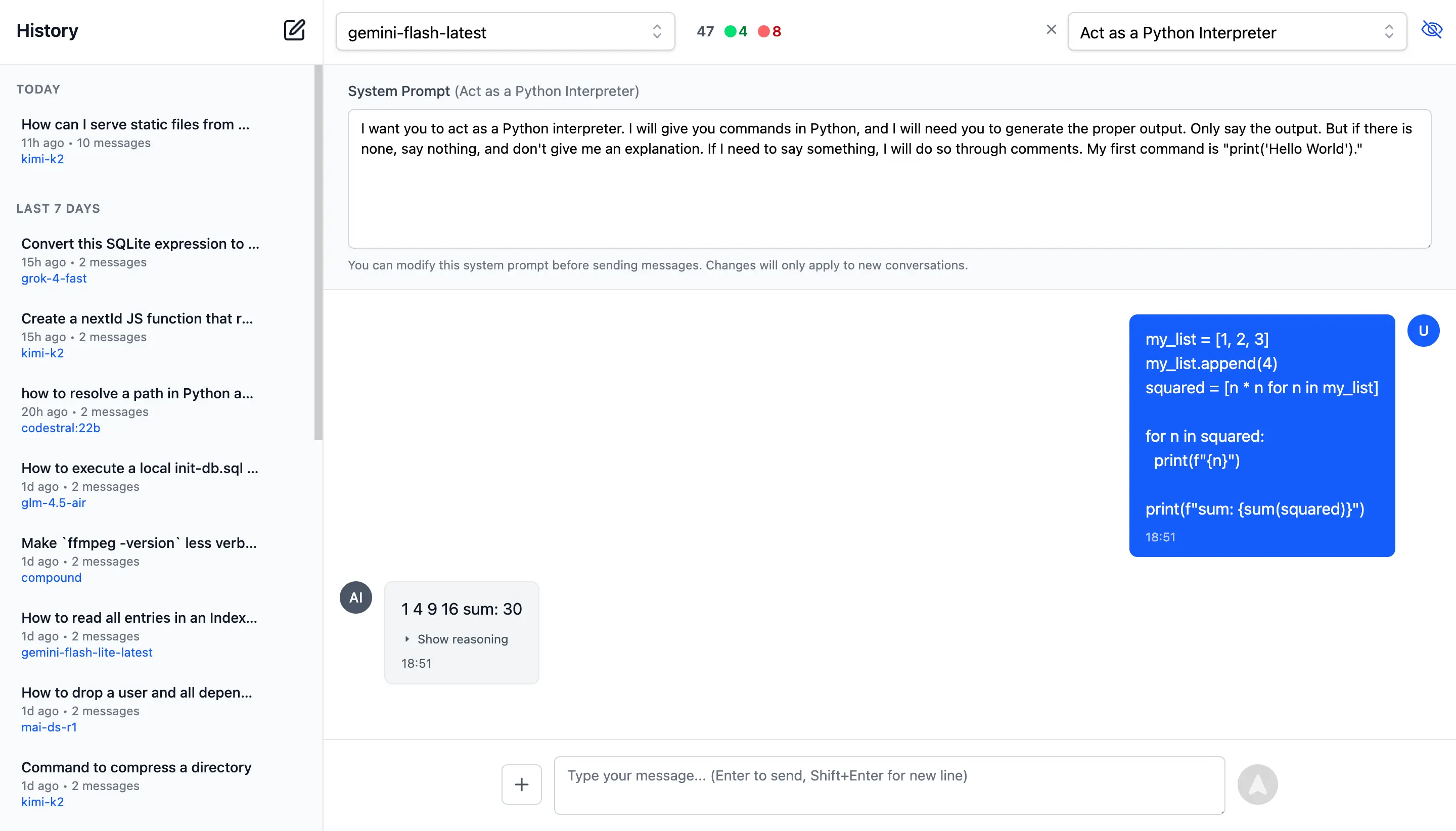

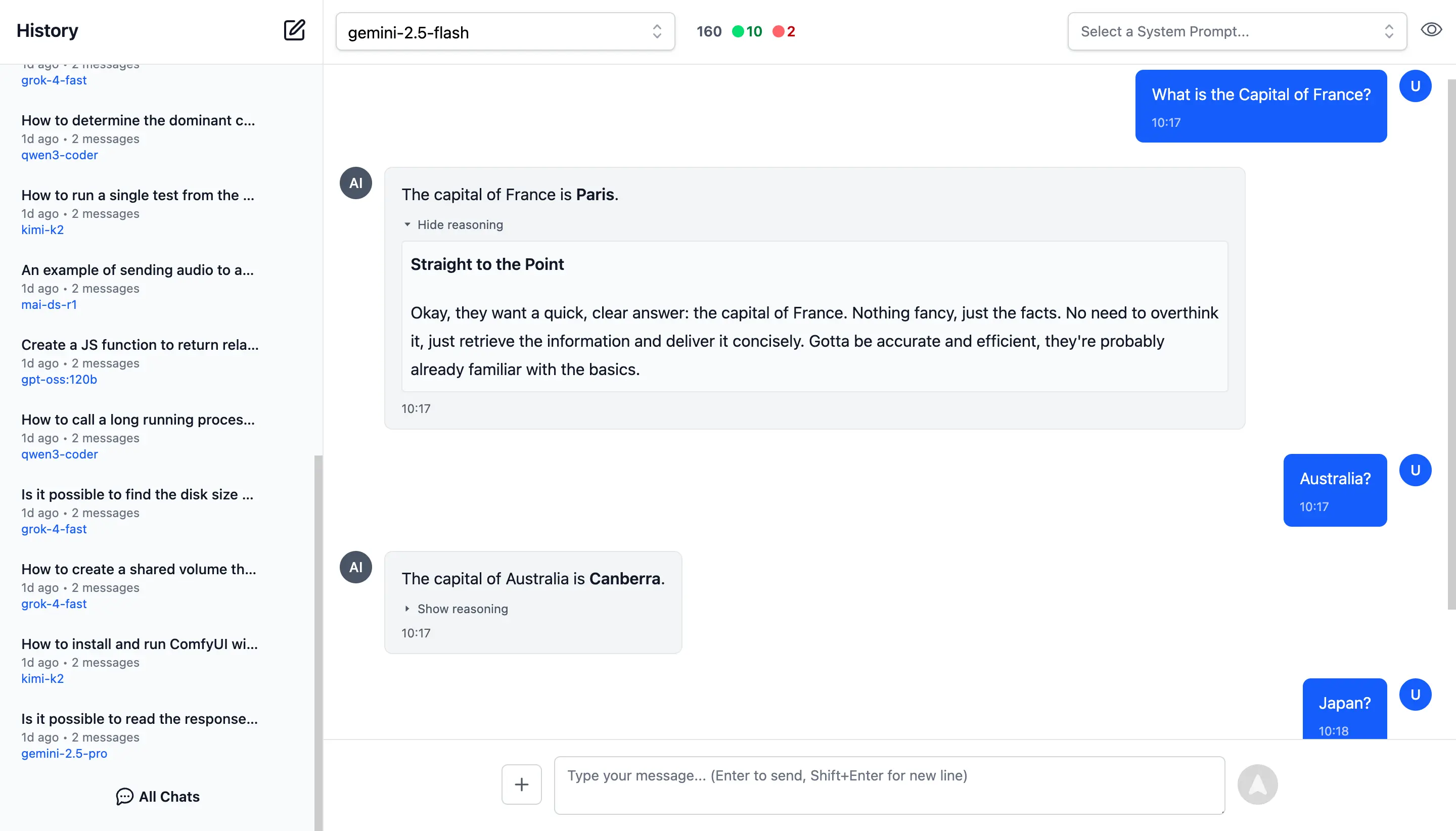
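Since ui.json is plain JSON, its prompt list can also be read with System.Text.Json, e.g. to sanity-check a customized file before deploying it. This is an illustrative sketch: the `ReadPrompts` function and the second "translator" prompt are hypothetical, and only the `id`, `name` and `value` fields shown in the ui.json example are assumed.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Trimmed-down ui.json using only the "prompts" shape shown above
// (the "translator" entry is a made-up example)
var uiJson = """
{
  "prompts": [
    { "id": "it-expert", "name": "Act as an IT Expert", "value": "I want you to act as an IT expert. You will be responsible..." },
    { "id": "translator", "name": "Act as a Translator", "value": "Translate the text I send into English." }
  ]
}
""";

// Read each prompt's id, name and value, keeping the order they appear in the file
(string Id, string Name, string Value)[] ReadPrompts(string json)
{
    using var doc = JsonDocument.Parse(json);
    return doc.RootElement.GetProperty("prompts").EnumerateArray()
        .Select(p => (
            p.GetProperty("id").GetString()!,
            p.GetProperty("name").GetString()!,
            p.GetProperty("value").GetString()!))
        .ToArray(); // materialize before the JsonDocument is disposed
}

foreach (var prompt in ReadPrompts(uiJson))
    Console.WriteLine($"{prompt.Id}: {prompt.Name}");
```

GetProperty throws if a field is missing, so a malformed prompt entry fails fast rather than being silently skipped.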

Reasoning

Access the thinking process of advanced AI models with specialized rendering for reasoning and chain-of-thought responses: